Engineering Insights is an ongoing blog series that gives a behind-the-scenes look into the technical challenges, lessons and advances that help our customers protect people and defend data every day. Our engineers write each post, explaining the process that led up to a Proofpoint innovation.

In modern threat detection, speed and adaptability are everything. Proofpoint’s global data pipeline processes billions of security events daily, from cloud logins to file transfers to mail activity. Detecting advanced threats in this constant stream of signals isn’t easy because it demands more than static logic: it requires rapid iteration, testing and improvement.

Today, many of our most advanced detections are powered by machine learning (ML) and AI models. These models identify subtle, high-risk behavioural patterns that traditional rule-based systems might miss. But developing and maintaining them comes with challenges. Analysts and data scientists need to train, test and compare multiple models simultaneously, often across different detection domains. In legacy environments, the process can be slow, costly and difficult to reproduce.

Traditional engineering lifecycles compound this challenge. Adding or updating a detector can take weeks of development and coordination. Production stability must be preserved, and analysts often rely on engineering teams for deployment. Experimentation is limited to shared environments, which makes it harder to test innovative logic or new models. As a result, valuable solutions take too long to reach our customers’ defences.

To solve these challenges, our engineering team built a modular orchestration platform tailored for developing, testing and optimising threat detection and enrichment pipelines.

This system enables analysts and data scientists to safely experiment with different detection approaches (including AI and ML models), share trained models across modules and quickly determine which configurations deliver the best results. It fundamentally changes how we build detectors, allowing our teams to innovate faster, validate performance with confidence and deploy new protections in days rather than months.

The challenge: scaling detection engineering

Proofpoint’s detection ecosystem spans a wide range of domains: account takeover (ATO), insider threats, post-access activity and cloud application security. Each of these relies on a set of detectors, enrichers and state creators operating across massive, distributed data sources.

Over time, it became increasingly complex to maintain and scale these systems. Analysts needed a way to:

- Build and test detectors without engineering dependencies

- Reproduce production data flows safely

- Understand and control costs for every experiment

- Monitor the health and reliability of the detectors

In short, we needed to make detection engineering as dynamic and composable as the threats we defend against.

That vision became the foundation for our modular orchestration platform—an internal system designed to bring cloud-scale automation, observability and control to every stage of the detection lifecycle.

The solution: modular orchestration for detection and enrichment

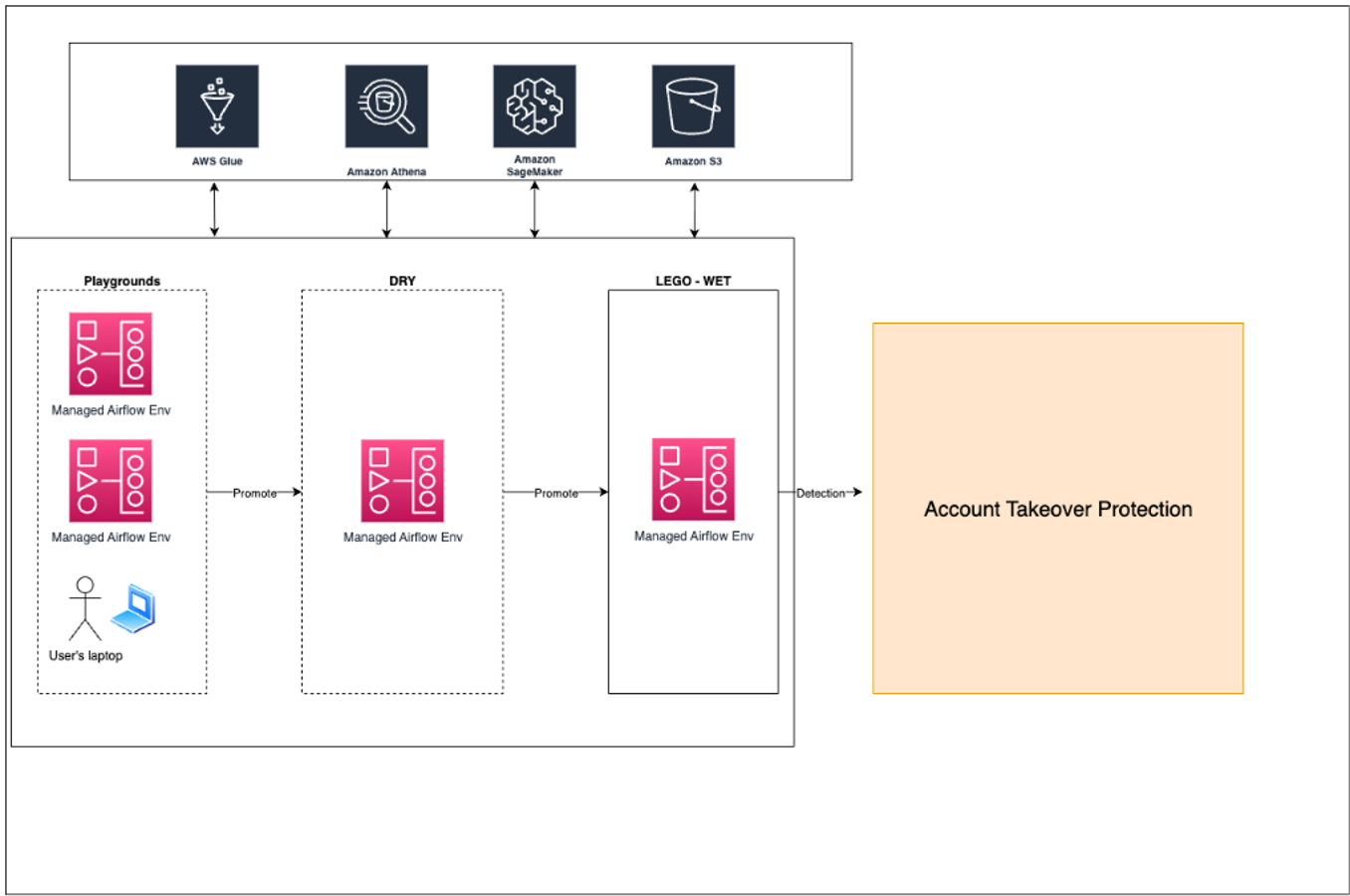

At its core, the platform provides an orchestration layer built on AWS Managed Airflow (MWAA) and integrated with Proofpoint’s existing data platform. Each data flow—called a module—is represented as an Airflow DAG that can read from or write to versioned data products on Amazon S3, using technologies like AWS Glue, Athena and Apache Iceberg.

These modules can represent:

- Detectors – Logic that flags suspicious behaviour or threat indicators

- Enrichers – Jobs that attach metadata, such as IP reputation, rarity scores or geographic context

- State creators – Background jobs that aggregate historical patterns to improve accuracy

Every DAG is fully composable, which means it can consume outputs from other modules. This allows us to build a layered data-processing graph across the Proofpoint detection ecosystem.
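To make the idea of composable modules concrete, here is a minimal sketch in plain Python. It does not use the Airflow API and is not the platform's actual code: the `Module` class, the `execution_order` helper and the module and data product names are all hypothetical. It only shows how declaring what each module reads and writes is enough to derive a layered processing order.

```python
from dataclasses import dataclass
from graphlib import TopologicalSorter


@dataclass(frozen=True)
class Module:
    """Hypothetical stand-in for one data flow (detector, enricher or state creator)."""
    name: str
    kind: str                      # "detector", "enricher" or "state_creator"
    reads: tuple[str, ...] = ()    # data products this module consumes
    writes: tuple[str, ...] = ()   # data products this module produces


def execution_order(modules: list[Module]) -> list[str]:
    """Order modules so that every producer runs before its consumers."""
    producer = {product: m.name for m in modules for product in m.writes}
    sorter = TopologicalSorter()
    for m in modules:
        # Only products written by another module create a dependency;
        # raw inputs with no producer are treated as already available.
        upstream = {producer[p] for p in m.reads if p in producer}
        sorter.add(m.name, *upstream)
    return list(sorter.static_order())


# Illustrative modules only; the names are assumptions, not real flows.
modules = [
    Module("session_hijack", "detector",
           reads=("enriched_logins", "login_baseline"), writes=("detections",)),
    Module("ip_reputation", "enricher",
           reads=("raw_logins",), writes=("enriched_logins",)),
    Module("login_history", "state_creator",
           reads=("raw_logins",), writes=("login_baseline",)),
]
order = execution_order(modules)
```

In this sketch the detector always comes last because it consumes the outputs of both the enricher and the state creator. If modules were wired into a loop, `graphlib` would raise a `CycleError` instead of returning an order.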

To ensure reliability and velocity, the system operates in three distinct tiers:

- Playground. A fully isolated environment for analysts to safely experiment with real data replicas. We can spin up as many Playgrounds as required.

- DRY. A shared staging layer where flows are validated and benchmarked.

- WET. The production-grade environment that emits validated detections into the cloud data loss prevention (cloud DLP), ATO and other downstream systems.

This structure gives analysts freedom to innovate while maintaining strong operational boundaries. Data access, cost controls and quality checks are all managed automatically through SDK-enforced best practices, such as mandatory TTLs for written data and automatic Glue table creation for every dataset.
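As an illustration of what an SDK-enforced rule such as a mandatory TTL could look like, here is a small sketch. The `Tier` enum, `WriteRequest` class and `plan_write` function are hypothetical names invented for this example; the real SDK's interface is not described in this post, and automatic Glue table creation is not modelled here.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from enum import Enum
from typing import Optional


class Tier(Enum):
    """The three environment tiers described above."""
    PLAYGROUND = "playground"
    DRY = "dry"
    WET = "wet"


@dataclass(frozen=True)
class WriteRequest:
    """Hypothetical record of a planned dataset write."""
    dataset: str
    tier: Tier
    expires_at: datetime


def plan_write(dataset: str, tier: Tier, ttl: Optional[timedelta],
               now: Optional[datetime] = None) -> WriteRequest:
    """Refuse to plan any write that does not carry a positive TTL."""
    if ttl is None or ttl <= timedelta(0):
        raise ValueError(f"{dataset}: a positive TTL is required for every write")
    now = now or datetime.now(timezone.utc)
    return WriteRequest(dataset=dataset, tier=tier, expires_at=now + ttl)
```

A call such as `plan_write("login_rarity", Tier.PLAYGROUND, timedelta(days=7))` returns a request that expires a week later, while passing `None` as the TTL raises a `ValueError` before anything is written. Putting the check in the write path, rather than in review guidelines, is what makes the practice enforced rather than advisory.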

Figure 1. Overview of the architecture of our modular orchestration solution.

Built-in intelligence and observability

The platform also includes a robust monitoring and governance layer. Integrations with Grafana and PagerDuty provide real-time visibility into Airflow health, flow performance and cost utilisation. When a flow fails, automated alerts send detailed logs via Teams and email, so the owning team can triage and recover quickly.

Anomaly detection is also applied to monitor:

- Athena query costs – Preventing unexpected spend

- Flow spamming – Identifying loops or runaway detectors

- Detection health – Tracking consistency of detection volumes and data quality
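The post does not say which anomaly detection method is used for these signals. As one simple possibility, the sketch below flags a value that sits far from the recent mean, measured in standard deviations (a z-score check). The function name, the threshold of 3 and the sample cost figures are assumptions made for illustration.

```python
from statistics import mean, stdev


def is_anomalous(history: list[float], latest: float, threshold: float = 3.0) -> bool:
    """Flag `latest` if it lies more than `threshold` sample standard
    deviations away from the mean of `history`."""
    if len(history) < 2:
        return False  # not enough data to estimate the spread
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return latest != mu  # flat history: any change is unusual
    return abs(latest - mu) / sigma > threshold


# Made-up daily query costs in USD for one flow.
daily_cost_usd = [12.0, 11.5, 12.4, 11.9, 12.2, 12.1, 11.8]
spike = is_anomalous(daily_cost_usd, 40.0)    # far above the usual range
normal = is_anomalous(daily_cost_usd, 12.3)   # within the usual range
```

The same check could be pointed at daily detection counts to watch detection health, or at run counts per flow to catch runaway loops. A production system would likely need something more robust to seasonality and outliers than a plain z-score.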

This embedded observability makes each data flow both autonomous and accountable. This is critical for operating a system that manages thousands of detectors across multiple regions.

Case study: accelerating delivery of advanced ATO detection

The impact of this orchestration platform is best illustrated by how it transformed the development lifecycle for Session Hijack, one of our most advanced ATO detectors.

This detector identifies potentially compromised user sessions by analysing unusual combinations of IP addresses, browsers, operating systems and authentication patterns. It correlates events within short time windows to surface suspicious activity that’s indicative of session takeovers.
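The general idea of correlating events within a short time window can be sketched as follows. This is not the Session Hijack detector's actual logic, which is not published here. The `SessionEvent` fields, the ten-minute window and the rule of "more than one distinct IP, browser and OS combination per session" are simplifying assumptions chosen to keep the example small.

```python
from collections import defaultdict, deque
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class SessionEvent:
    """Hypothetical, simplified event record."""
    session_id: str
    timestamp: datetime
    ip: str
    browser: str
    os: str


def suspicious_sessions(events, window=timedelta(minutes=10), max_fingerprints=1):
    """Return IDs of sessions that show more than `max_fingerprints` distinct
    (ip, browser, os) combinations within any span of length `window`."""
    recent = defaultdict(deque)  # session_id -> events inside the current window
    flagged = set()
    for event in sorted(events, key=lambda e: e.timestamp):
        queue = recent[event.session_id]
        queue.append(event)
        # Drop events that fall outside the window ending at this event.
        while event.timestamp - queue[0].timestamp > window:
            queue.popleft()
        fingerprints = {(e.ip, e.browser, e.os) for e in queue}
        if len(fingerprints) > max_fingerprints:
            flagged.add(event.session_id)
    return flagged


t0 = datetime(2025, 1, 1, 12, 0)
events = [
    # s1 changes IP, browser and OS within three minutes.
    SessionEvent("s1", t0, "203.0.113.5", "Chrome", "Windows"),
    SessionEvent("s1", t0 + timedelta(minutes=3), "198.51.100.7", "Firefox", "Linux"),
    # s2 changes IP, but two hours apart, so the events never share a window.
    SessionEvent("s2", t0, "203.0.113.9", "Safari", "macOS"),
    SessionEvent("s2", t0 + timedelta(hours=2), "192.0.2.44", "Safari", "macOS"),
]
flagged = suspicious_sessions(events)
```

Here only `s1` is flagged. A real detector would weigh many more signals, such as authentication patterns and how rare each combination is for the user, rather than counting distinct fingerprints.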

Before this platform existed, it could take months to deliver a detector like Session Hijack into production. Analysts would design the logic, request engineering support for data ingestion and orchestration, and navigate multiple deployment cycles across testing and staging environments. Each adjustment required additional engineering time, which further delayed the moment when new protection reached customers.

With this modular platform, the same type of detector can move from concept to production in just a few days. Analysts can independently develop, test and refine the logic in isolated environments replicating production conditions. Meanwhile, built-in automation handles deployment and validation.

The result: faster innovation, quicker protection for our customers and a more efficient collaboration loop between detection research and engineering.

Collaboration and cost efficiency at scale

One of the most powerful aspects of the platform is how it bridges research and engineering. Analysts can operate independently while still committing code to shared repositories, where all flows are version-controlled and auditable in Git. Rollbacks are instant, and every DAG execution is traceable down to the data products it read or wrote.

At the same time, cost tracking is built in. This ensures that experimentation doesn’t sacrifice efficiency. Data written to S3 must specify time-to-live (TTL), ensuring automatic cleanup. Athena query costs and S3 usage metrics feed into dashboards that surface optimisation opportunities.
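To show how per-module cost attribution might feed such dashboards, here is a minimal sketch that rolls up query costs from bytes scanned. The `cost_by_module` function, the log format and the rate passed in are all assumptions: this post does not describe the real metrics pipeline, and the price per terabyte scanned should be taken from current Athena pricing for the relevant region rather than from this example.

```python
from collections import defaultdict

BYTES_PER_TB = 10**12  # assumption: decimal terabytes


def cost_by_module(query_log, usd_per_tb_scanned):
    """Sum the estimated query cost per module.

    `query_log` is an iterable of (module_name, bytes_scanned) pairs.
    """
    totals = defaultdict(float)
    for module, bytes_scanned in query_log:
        totals[module] += bytes_scanned / BYTES_PER_TB * usd_per_tb_scanned
    return dict(totals)


# Made-up query records and an illustrative rate.
query_log = [
    ("session_hijack", 2 * 10**12),
    ("ip_reputation", 5 * 10**11),
    ("session_hijack", 5 * 10**11),
]
totals = cost_by_module(query_log, usd_per_tb_scanned=5.0)
```

Grouping by module (or by Playground, in an experiment setting) is what lets a dashboard answer "which flow is driving spend?" rather than only reporting a single account-wide number.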

This balance between freedom and control has allowed Proofpoint to scale the number of active detectors dramatically while maintaining predictable costs and operational stability.

Redefining how Proofpoint engineers detect threats

The modular orchestration platform represents more than just infrastructure—it’s a shift in how we innovate. By empowering analysts to experiment safely, validate rapidly and deploy confidently, Proofpoint has redefined what agility means in the context of large-scale threat detection.

It has shortened development cycles from months to days, strengthened collaboration between research and engineering, and ensured that every new insight can quickly translate into stronger customer protection.

At Proofpoint, we believe the ability to adapt as fast as adversaries evolve is the cornerstone of modern cybersecurity. This platform helps us do exactly that—continuously improving the speed, precision and resilience of our detections across the global threat landscape.

Join the team

At Proofpoint, our people—and the diversity of their lived experiences and backgrounds—are the driving force behind our success. We have a passion for protecting people, data and brands from today’s advanced threats and compliance risks.

We hire the best people in the business to:

- Build and enhance our proven security platform

- Blend innovation and speed in a constantly evolving cloud architecture

- Analyse new threats and offer deep insight through data-driven intelligence

- Collaborate with our customers to help solve their toughest cybersecurity challenges

If you’re interested in learning more about career opportunities at Proofpoint, visit the careers page.

About the authors

Guy Sela

Guy Sela is a principal engineer and chief architect on Proofpoint’s Cloud DLP and ATO teams. With over 19 years of experience across diverse software domains—including founding his own poker-training software company—he brings a passion for building scalable, impactful solutions. He lives in Austin, Texas, with his wife, Alejandra, and their son, Oz.

Sarah Skutch Freedman

Sarah is a software-slash-data engineer at Proofpoint. She loves the challenge of balancing cost and efficiency to solve complex problems at scale. When not optimising data pipelines, she enjoys travelling and is addicted to caffeine and CrossFit.

Sharon Rozinsky

Sharon Rozinsky is an experienced software engineer who specialises in backend and data engineering. He is passionate about designing and building large-scale systems that create meaningful impact for end users. Outside of work, he enjoys bouldering, cycling and building Lego creations with his two kids.

Nir Shneor

Nir Shneor is a tech leader with deep roots in software architecture and backend engineering. He is experienced in leading teams in startup environments, building products from the ground up and scaling technical organisations. Passionate about mentoring others, Nir enjoys solving complex problems and exploring nature through hiking. He is a proud father of three boys and a forward-thinking visionary.