This blog post is the first of a two-part series on generative AI. In part two, we’ll discuss how Proofpoint can help you protect your data in generative AI tools like ChatGPT.

Generative AI is everywhere in the news these days. And, as with the release of any new revolutionary technology, there’s a lot of hype. So we thought we’d cover some of the basics—without all the hoopla—for those of you who are just getting up to speed.

In this article, we’ll cover what generative AI is and why it promises to change the way we all work. We’ll also explore how people are already using it. Knowing all this is key to understanding why it puts corporate data at risk, why there is a push to regulate it and why the FTC just launched an investigation of ChatGPT.

What you should know about generative AI

Generative AI is a form of artificial intelligence (AI) that uses deep learning techniques to create new content similar to the content its models were trained on. This new content can include anything from text to images, music, video and code.

Generative AI models are typically trained on large amounts of data using complex algorithms, which lets them learn the patterns and structures in that data. Once trained, these models can generate new data that is similar in style, tone or content to the original data. For example, OpenAI’s GPT-3 model was trained on text datasets drawn from the internet, including 570GB of data obtained from books, web text, Wikipedia and articles.

One of the most popular generative AI tools is ChatGPT—a free chatbot that can generate an answer to almost any question it’s asked. Developed by OpenAI and released in November 2022, ChatGPT reached more than 100 million users in a little over two months. It is designed to work primarily in English. However, it also understands certain inputs in 95 other languages, including Spanish, French, Chinese, German and Irish.

Generative AI will change how we work

Today’s generative AI tools go well beyond chatbots. Alongside language models, they include image and video synthesis models, as well as models that generate music and art. This means generative AI has a wide range of applications, from content creation to product design to medical research.

For example, OpenAI’s DALL-E system produces AI-generated art. When I asked it to generate a comic book image of a cyber attacker, it provided me with multiple options in just three seconds (see Figure 1).

Figure 1. Comic book strip, generated by DALL-E, of a cyber attacker accessing computer networks.

And then there’s GPT-4, OpenAI’s more advanced large language model. GPT-4 can help businesses automate customer support because it can understand customer inquiries and answer common questions. It can also help identify patterns that indicate fraudulent activity, such as unusual purchasing behavior.

Generative AI has the potential to assist professionals across a wide variety of industries. Financial advisers can use it to analyze market trends and consumer behaviors. Teachers can use AI-powered tools to analyze students’ learning needs and preferences to customize their lessons. And security analysts can use it to study user behavior and identify behavioral patterns that lead to data exfiltration. Recently, Paul McCartney told BBC Radio 4 that he used AI to extract John Lennon's voice from an old demo tape to create a final Beatles record.

Ways people use generative AI tools in the real world

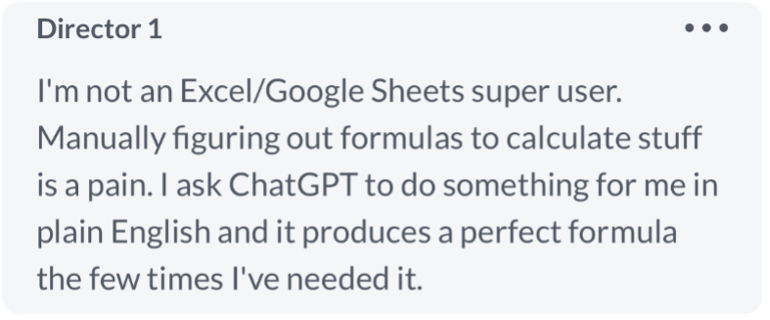

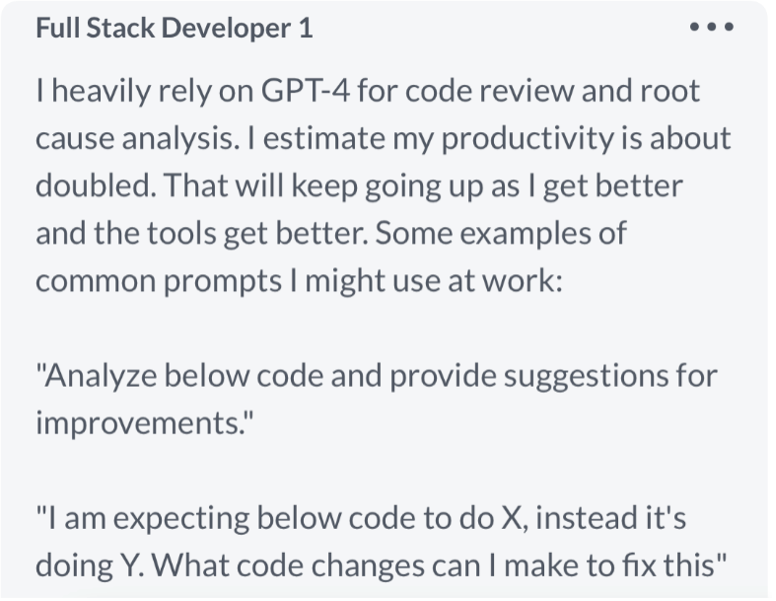

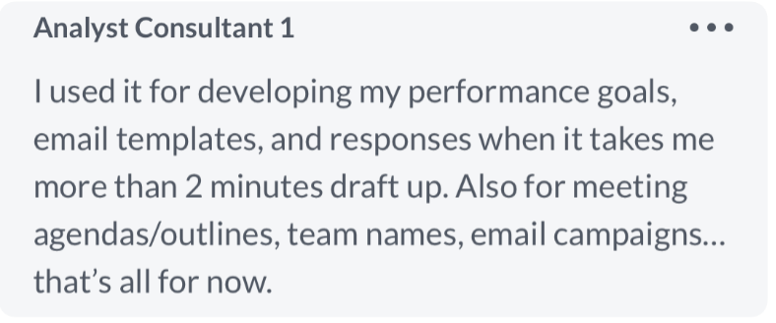

The following examples were taken from a messaging board where the original poster asked: “[I’m] looking for real-world ways that you are already integrating AI into your daily work…”:

Figure 2: Excerpt from a director’s answer, describing how generative AI helps with Excel formulas.

Figure 3: Excerpt from a full stack developer’s answer, describing how generative AI helps with code review.

Figure 4: Excerpt from an analyst consultant’s answer, describing how generative AI helps with daily tasks.

Risks are inherent with any new technology

Generative AI promises significant productivity gains. But like any revolutionary technology, generative AI also carries risks. Here are a few:

- IP leakage. Take the case of “full stack developer 1” from Figure 3 above. One has to wonder whether the source code he reviewed in ChatGPT has now entered the public domain. And does OpenAI have a claim to that source code, since its AI helped develop it?

- Inaccurate information. Recently, a federal judge dismissed a lawsuit and fined the plaintiff’s lawyers $5,000 after they used ChatGPT for legal research and submitted a court filing that cited six fake cases invented by the generative AI tool.

- Bias. Because generative AI learns from existing content, it can be as biased as humans, or maybe even more so. In a recent study, Bloomberg observed that “Stable Diffusion’s text-to-image model amplifies stereotypes about race and gender.”

- Privacy compliance. Without an information protection platform, there is no automated way to stop employees from inputting personally identifiable information (PII), such as health or financial data, into generative AI tools.
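To make the privacy point concrete, here is a minimal sketch of the kind of automated check that could screen a prompt before it reaches a generative AI tool. It is purely illustrative and assumes simple pattern matching: the names `PII_PATTERNS`, `find_pii` and `screen_prompt` are hypothetical, the regexes cover only a few PII types (email addresses, US Social Security numbers and payment card numbers checked with the Luhn checksum), and this is not how Proofpoint Sigma or any other product actually works.

```python
import re

# Hypothetical, illustrative patterns for a few common PII types.
# Production data loss prevention tools use far richer detectors.
PII_PATTERNS = {
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "card_number": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
}


def luhn_valid(number: str) -> bool:
    """Return True if the digit string passes the Luhn checksum."""
    total = 0
    # Double every second digit from the right; subtract 9 if the result exceeds 9.
    for i, ch in enumerate(reversed(number)):
        d = int(ch)
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def find_pii(prompt: str) -> list[str]:
    """Return the names of PII types detected in a prompt (hypothetical helper)."""
    found = []
    for name, pattern in PII_PATTERNS.items():
        for match in pattern.finditer(prompt):
            if name == "card_number":
                digits = re.sub(r"\D", "", match.group())
                if not luhn_valid(digits):
                    continue  # ignore digit runs that fail the checksum
            found.append(name)
            break  # one hit per type is enough to flag the prompt
    return found


def screen_prompt(prompt: str) -> bool:
    """Return True if the prompt may be sent, False if it should be blocked."""
    return not find_pii(prompt)


if __name__ == "__main__":
    print(screen_prompt("Draft a polite meeting reminder"))
    print(find_pii("Email jane.doe@example.com about SSN 123-45-6789"))
```

The Luhn check is there to cut down on false positives: a long run of digits such as an order number is only flagged as a card number if it also passes the checksum that real card numbers satisfy.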

Recognizing these risks, developed countries are introducing AI regulations that require transparency, human oversight and other safeguards for generative AI tools.

How to monitor generative AI use and prevent data loss

Yes, many businesses want to learn how their employees are using generative AI. But they also want to protect their IP and other sensitive data. The Proofpoint Sigma information protection platform can help companies monitor their users’ activities on generative AI services such as ChatGPT and prevent the loss of valuable corporate data.

By monitoring the use of generative AI services, you can learn from your employees how to adopt this technology to help increase productivity and innovation. At the same time, you can take a people-centric approach to security and tighten security controls when you observe risky behaviors.

In our next blog post, we’ll explore how Proofpoint can help you safeguard your data when it’s used in generative AI tools like ChatGPT.

Find out more

If you want to learn about artificial intelligence and machine learning (AI/ML) in information security, join our Cybersecurity Leadership certification program. This program will teach you about the risks AI/ML poses to cybersecurity as well as best practices for adopting AI/ML to protect your people and data more effectively. The enrollment deadline is August 8 at 5 p.m. PST.

And be sure to check out the People Lose Data webinar series and our Proofpoint Sigma information protection platform brief to learn more about defending against data loss and insider threats.