Engineering Insights is an ongoing blog series that gives a behind-the-scenes look into the technical challenges, lessons and advances that help our customers protect people and defend data every day. Our engineers write each post, explaining the process that led up to a Proofpoint innovation.

NOTE: This blog post describes a solution that the author considers interesting from a technical perspective. However, the unit tests described in the post do not relate to any Proofpoint product and do not have a customer impact.

Recently, we encountered continuous integration (CI) build failures in two of our microservices, caused by Java unit tests.

Even though the Java heap size limit was appropriate relative to the resources of the Kubernetes pod, each test run ended with a Kubernetes OOMKilled error. The logs showed no errors, and no core dump or heap dump file was generated.

The OOMKilled error messages mentioned the Netty C library. In both cases, the cause was traced to Google Cloud Platform (GCP) APIs and OpenTelemetry, both of which make gRPC calls that rely on Netty under the hood. gRPC is a modern, open-source, high-performance remote procedure call (RPC) framework. During the tests, the gRPC calls failed because they were not expected to run and were therefore not configured properly. Disabling the gRPC connections during the tests resolved the issue.

Although the gRPC connections should not have been enabled during the tests, it was still unclear why they led to OOMKilled errors, given that the Java heap size limit was appropriate for the container.

Then, in this developer blog, I came across the following detail: “Netty uses ByteBuffers and direct memory to allocate and deallocate memory.” This made me realize that an unexpected increase in off-heap memory usage might be the cause of the OOMKilled errors.

The problem: an increase in off-heap memory usage

Let's look at an abstract example of how this could happen. In this example, we examine the Java memory usage of our application before and after the gRPC calls.

Java heap size

    -Xms128m (initial heap size)
    -Xmx256m (max heap size)

Kubernetes pod resource requests and limits

    resources:
      requests:
        memory: 200M
      limits:
        memory: 360M

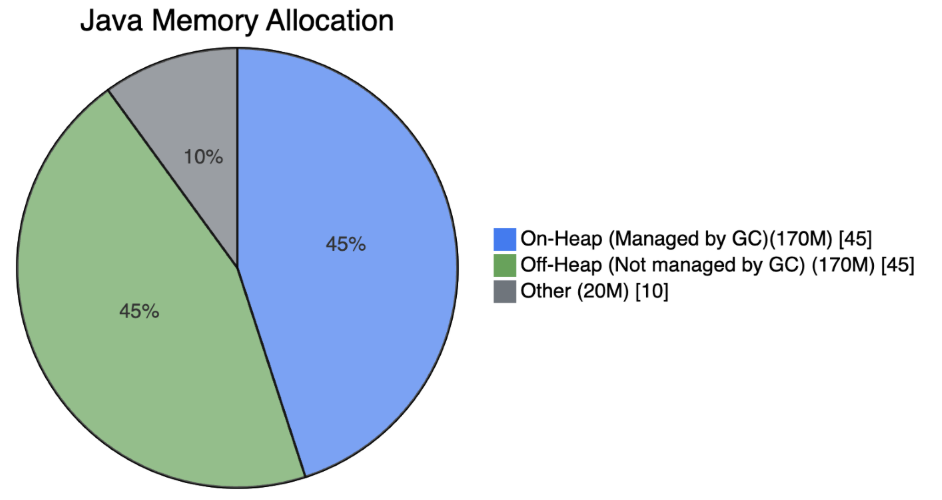

Figure 1: Java memory allocation before the gRPC calls - total 200M.

Figure 2: Java memory allocation after the gRPC calls - total 360M.

As shown in the example, on-heap memory usage stays about the same, but off-heap memory usage increases significantly after the gRPC calls. Crucially, the maximum Java heap size is never exceeded, yet the total memory of the process (heap plus off-heap) reaches the pod memory limit. This happens because off-heap memory is allocated outside the heap that the Java virtual machine (JVM) garbage collector (GC) manages, so the -Xmx setting does not cap it. As a result, the process can grow beyond the pod's memory limit, at which point Kubernetes kills the container and reports the OOMKilled error.
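The effect can be reproduced in isolation. The following is a minimal sketch, not the code from the failing tests: it allocates direct buffers with the standard `ByteBuffer.allocateDirect` call and reads the JDK's "direct" buffer pool through `BufferPoolMXBean`. The class name `DirectMemoryDemo` and the buffer sizes are illustrative assumptions.

```java
import java.lang.management.BufferPoolMXBean;
import java.lang.management.ManagementFactory;
import java.nio.ByteBuffer;
import java.util.ArrayList;
import java.util.List;

// Hypothetical demo class: shows off-heap (direct) memory growing
// while the Java heap stays roughly the same.
class DirectMemoryDemo {

    /** Bytes currently reserved by the JDK's "direct" buffer pool, or -1 if not reported. */
    static long directBytesUsed() {
        for (BufferPoolMXBean pool : ManagementFactory.getPlatformMXBeans(BufferPoolMXBean.class)) {
            if ("direct".equals(pool.getName())) {
                return pool.getMemoryUsed();
            }
        }
        return -1;
    }

    /** Allocates `count` direct buffers of `sizeBytes` each and returns them so they stay reachable. */
    static List<ByteBuffer> allocateDirect(int count, int sizeBytes) {
        List<ByteBuffer> buffers = new ArrayList<>();
        for (int i = 0; i < count; i++) {
            buffers.add(ByteBuffer.allocateDirect(sizeBytes));
        }
        return buffers;
    }

    public static void main(String[] args) {
        Runtime rt = Runtime.getRuntime();
        long heapBefore = rt.totalMemory() - rt.freeMemory();
        long directBefore = directBytesUsed();

        // 16 buffers of 1 MiB each, all allocated outside the Java heap.
        List<ByteBuffer> held = allocateDirect(16, 1024 * 1024);

        long heapAfter = rt.totalMemory() - rt.freeMemory();
        long directAfter = directBytesUsed();

        System.out.printf("heap used:   %d -> %d bytes%n", heapBefore, heapAfter);
        System.out.printf("direct used: %d -> %d bytes%n", directBefore, directAfter);
        System.out.println("buffers held: " + held.size());
    }
}
```

The direct pool should grow by at least 16 MiB while heap usage changes only marginally. Netty manages its own pooled buffers, so its real native usage may not be fully visible in this JDK pool; treat the metric as a lower bound rather than a complete picture.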

The solution: configuring additional memory limits

gRPC is widely used in Google Cloud client libraries, OpenTelemetry, and more. When an application uses gRPC or a similar framework that consumes significant native memory, it is important to measure the off-heap memory usage and understand how it counts toward the pod memory limit. Because the io.grpc package uses Netty, the same problem can occur in any microservice that depends on it. To avoid errors, some adjustments are required.

Commonly, the Java heap size is configured to be slightly less than the pod memory limit. You can configure this with the -Xms and -Xmx JVM options, or with the -XX:MaxRAMPercentage JVM option. However, in situations such as the preceding example, heap plus off-heap direct memory can exceed the pod memory limit, which results in the OOMKilled error.

NOTE: The GC needs some extra memory while it performs a garbage collection. Two times the maximum heap size is the worst-case value, but usually the overhead is significantly less. This extra memory requirement means that the resident set size (RSS) might increase temporarily during the collection. This can cause a problem in any environment with memory constraints, such as a container.

A more controlled approach is to configure the direct memory limit as well, and to set the pod memory limit to at least the sum of the maximum heap and maximum off-heap (direct memory) values. You should also allow for other types of memory, such as Metaspace, thread stacks and the code cache. To cap the direct memory usage, you can set the -XX:MaxDirectMemorySize JVM option.
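To confirm which limits a running JVM actually applies, the values can be read at startup. The following is a sketch that assumes a HotSpot-based JVM, where `com.sun.management.HotSpotDiagnosticMXBean` is available; the class name `JvmMemoryLimits` is illustrative. To my understanding, when -XX:MaxDirectMemorySize is not set, HotSpot reports it as 0 and the JDK falls back to a direct memory cap roughly equal to the maximum heap size.

```java
import com.sun.management.HotSpotDiagnosticMXBean;
import java.lang.management.ManagementFactory;

// Hypothetical helper: reports the heap and direct memory limits the JVM is running with.
// Assumes a HotSpot-based JVM; other JVMs may not expose this MXBean or option.
class JvmMemoryLimits {

    /** Maximum heap in bytes (reflects -Xmx or -XX:MaxRAMPercentage). */
    static long maxHeapBytes() {
        return Runtime.getRuntime().maxMemory();
    }

    /** Value of -XX:MaxDirectMemorySize in bytes; 0 means the flag was not set. */
    static long configuredMaxDirectBytes() {
        HotSpotDiagnosticMXBean hotspot =
                ManagementFactory.getPlatformMXBean(HotSpotDiagnosticMXBean.class);
        return Long.parseLong(hotspot.getVMOption("MaxDirectMemorySize").getValue());
    }

    /** Direct memory cap in effect, assuming the default falls back to the maximum heap size. */
    static long effectiveMaxDirectBytes() {
        long configured = configuredMaxDirectBytes();
        return configured > 0 ? configured : maxHeapBytes();
    }

    public static void main(String[] args) {
        long mib = 1024L * 1024L;
        System.out.println("max heap (MiB):             " + maxHeapBytes() / mib);
        System.out.println("MaxDirectMemorySize (MiB):  " + configuredMaxDirectBytes() / mib);
        System.out.println("effective direct cap (MiB): " + effectiveMaxDirectBytes() / mib);
    }
}
```

Logging these values once at startup makes it easier to spot a container whose limit is smaller than the heap cap plus the direct memory cap.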

NOTE: Native Image may also allocate memory that is separate from the Java heap. One common use case is a java.nio.DirectByteBuffer that directly references native memory. The -XX:MaxDirectMemorySize value is the maximum size of direct buffer allocations.

Together with a matching pod resources configuration, these extra limits give you a more controlled approach. A full example is shown below.

Dockerfile

    FROM openjdk...
    ...
    ENTRYPOINT ["java", "-Xms512m", "-Xmx2g", "-XX:MaxDirectMemorySize=700m", "-jar", "/app/my-grpc-app.jar"]

Kubernetes pod resource requests and limits

    resources:
      requests:
        memory: "2Gi" # minimum memory request
      limits:
        memory: "3Gi" # maximum memory limit
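The arithmetic behind the example above can be checked with a few lines of Java. This is only a sketch of the budget calculation; the class and method names are illustrative, and the headroom is whatever remains for Metaspace, thread stacks, GC overhead and other native allocations.

```java
// Hypothetical helper: computes how much of the pod limit is left
// after the heap cap and the direct memory cap are accounted for.
class PodMemoryBudget {
    static final long MIB = 1024L * 1024L;

    /** Pod limit minus (max heap + max direct memory), in bytes. */
    static long headroomBytes(long podLimitBytes, long maxHeapBytes, long maxDirectBytes) {
        return podLimitBytes - (maxHeapBytes + maxDirectBytes);
    }

    public static void main(String[] args) {
        long podLimit = 3L * 1024 * MIB;  // limits.memory: "3Gi"
        long maxHeap = 2L * 1024 * MIB;   // -Xmx2g
        long maxDirect = 700 * MIB;       // -XX:MaxDirectMemorySize=700m

        long headroom = headroomBytes(podLimit, maxHeap, maxDirect);
        System.out.println("headroom MiB: " + headroom / MIB); // prints "headroom MiB: 324"
    }
}
```

With these settings, 3072 MiB - (2048 MiB + 700 MiB) leaves 324 MiB for everything the JVM allocates outside the heap and direct buffers. A negative result would mean the JVM limits alone already exceed the pod limit.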


About the author

Liran Mendelovich is a senior software developer. His interests include all aspects of development—design and implementation, problem solving, performance optimization, concurrency, distributed systems and microservices—as well as open-source libraries.